The goal of a good logistics system is to bring in product, track the product while it is in your possession, and ship it to the customer in a timely and accurate manner. The goal has not changed over the years, but the tools and programs available to perform this task have dramatically improved and will continue to be refined in the future. An inventory-management system combines the use of desktop software, barcode scanners, barcode printers, etc. to streamline the management of inventory—from raw materials, to work in process, to finished goods being shipped. Whether you are tracking inventory used to manufacture a product or reselling a product, using an inventory system provides accountability and minimizes inventory stock-outs and shrinkage. This article will give an overview of what questions one should ask before implementing a new program and how the program should ideally interact with all phases of an operation—from raw material tracking to shipping and delivery of finished goods. The article will focus on Adage ERP by Infor (which has many features common to most systems used by medium-sized manufacturing companies).

A well-designed inventory-control system provides easy access to accurate information, but that information depends on manual input: if the input is not accurate, the information will not be useful. Good inventory-control techniques and timely cycle counts are critical. Warehouses should be clean and dry (preferably racked) and should use a first-in, first-out (FIFO) rotation system that keeps inventory in good physical condition. These foundational elements of a solid inventory-control system must be in place for any new computer-based program to work properly.
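To make FIFO rotation concrete, here is a minimal Python sketch of how a system might issue stock oldest-first. It assumes each receipt is tracked as a separate lot and that the order of receipt defines age. The `Lot` and `FifoBin` names and the lot numbers are hypothetical and do not come from Adage or any other product.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Lot:
    lot_id: str  # hypothetical lot number assigned at receiving
    qty: int


class FifoBin:
    """Stock of one item, issued first in, first out (oldest lot first)."""

    def __init__(self) -> None:
        self._lots: deque[Lot] = deque()

    def receive(self, lot: Lot) -> None:
        # New receipts join the back of the line.
        self._lots.append(lot)

    def on_hand(self) -> int:
        return sum(lot.qty for lot in self._lots)

    def pick(self, qty: int) -> list[tuple[str, int]]:
        """Issue qty units from the oldest lots; return (lot_id, qty) pairs."""
        if qty > self.on_hand():
            raise ValueError("insufficient stock")
        picked: list[tuple[str, int]] = []
        while qty > 0:
            oldest = self._lots[0]
            take = min(qty, oldest.qty)
            picked.append((oldest.lot_id, take))
            oldest.qty -= take
            qty -= take
            if oldest.qty == 0:
                self._lots.popleft()  # lot fully used up
        return picked


stock = FifoBin()
stock.receive(Lot("A-100", 30))
stock.receive(Lot("A-101", 50))
print(stock.pick(40))   # [('A-100', 30), ('A-101', 10)]
print(stock.on_hand())  # 40
```

The older lot is always emptied before the newer one is touched, which is exactly the rotation a physical FIFO system is meant to enforce on the warehouse floor.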

When implementing the new system, you will need a dedicated team led by a knowledgeable, flexible team leader who is a good problem solver. Team members should have hands-on experience to ensure all operational requirements are covered. The best systems require a well-coordinated team effort.

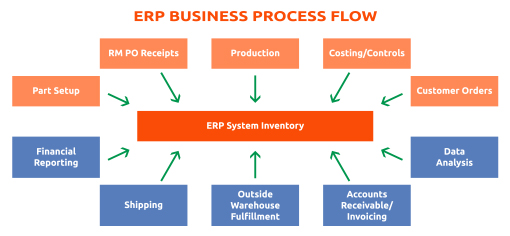

There are hundreds of inventory-control programs available. Most of the more comprehensive inventory-control systems work as part of an Enterprise Resource Planning (ERP) system. Some of the best-known ERP systems are SAP, Microsoft Dynamics ERP, Great Plains (GP), M3 by Infor, and Adage ERP by Infor. Every software system has its strengths and challenges; the goal is to pick the one that best suits your company's processes. Consider the following questions when choosing a program.

- Are you a production site or a warehouse operation?

- Do you produce products on a continuous basis, or in a diverse, one-of-a-kind, or short-run process?

- Do you have multiple locations (e.g., satellite warehouses)?

- Do you supply multiple job sites in the same area, or are they located nationwide?

- Do you need to track inventory shipments or freight?

- Are you a large, medium, or small company?

- How much program support will you need?

- What are your cost limitations?

These questions will help you find the program best suited to your company, at the right price point.

At our company, Adage ERP has worked well for our needs. It allows us to follow the life cycle of our business through customer setup, pricing, order entry, order fulfillment, scheduling, manufacturing, bar code label printing, shipping, cycle counts, and invoicing. The heart of any good ERP system is keeping its data current at all times and accurately tracking the movement of goods, so that inventory remains accurate from the receipt of goods through shipment to the customer. Essentially, this means tracking two main functions, receiving (incoming) and shipping (outgoing), which allows you to make smarter inventory decisions. The system has improved our order entry process, reduced understock and overstock situations, eliminated data entry errors through scanning, made cycle counting of the entire warehouse more efficient, and given us the ability to share inventory data.

When determining if a specific system is best for you, consider:

- How easy is it to enter a new part number?

- Can the system handle multiple product configurations?

- Can prices be changed easily?

- How much system flexibility do you need?

We also use ASSISTics, from A.S.S.I.S.T., Inc., a system that streamlines functionality with Adage. ASSISTics allows us to integrate Adage into our receiving, shipping, and tracking capabilities. Customers can track shipments, and we can trace products back through the manufacturing process to our raw materials.
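Tracing a product back to its raw materials amounts to walking a lot genealogy: each finished-goods or work-in-process lot records which lots were consumed to make it. The Python sketch below assumes such a record exists as a simple dictionary. The lot numbers and the `trace_to_raw` function are hypothetical illustrations, not the ASSISTics data model.

```python
from __future__ import annotations


def trace_to_raw(lot: str, genealogy: dict[str, list[str]]) -> set[str]:
    """Return every raw material lot that went into `lot`.

    `genealogy` maps each lot to the lots consumed to make it; a lot with
    no recorded inputs is treated as a raw material lot.
    """
    raw: set[str] = set()
    seen: set[str] = set()
    stack = [lot]
    while stack:  # depth-first walk back through the process steps
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        inputs = genealogy.get(current, [])
        if inputs:
            stack.extend(inputs)
        else:
            raw.add(current)
    return raw


# Hypothetical genealogy: a finished lot made from one WIP lot and one raw lot.
genealogy = {
    "FG-9001": ["WIP-501", "RM-13"],
    "WIP-501": ["RM-11", "RM-12"],
}
print(sorted(trace_to_raw("FG-9001", genealogy)))  # ['RM-11', 'RM-12', 'RM-13']
```

The `seen` set keeps the walk from revisiting a lot that feeds more than one step, so the trace stays correct even when a raw material lot is used at several points in the process.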

Our business had outgrown its previous custom-designed, in-house system. The new system improved our ability to enter orders, to answer customer questions about orders immediately (because the information is readily available), and to share inventory information across functions in the organization.

The ASSISTics inventory-management system works as follows:

- Order is entered into the system;

- The stock inventory is reviewed to determine a promised ship date;

- Incoming raw material barcode is scanned;

- Bin location is assigned for raw material;

- Raw material bar code is scanned and product relieved from inventory as it is used in the manufacturing process;

- Work in process is scanned at each step in the process and eventually scanned into finished goods inventory, at which point a bin location is assigned;

- Inventory is relieved when product is shipped;

- Relieving inventory triggers the production schedule, which in turn triggers raw material purchases based on minimum/maximum inventory levels and vendor delivery times;

- Cycle counts are conducted using bar code scanners;

- If there is a problem in the field, using the lot number associated with the product shipped, we can trace the product back through the process to the raw materials used; and

- Inventory data is updated continuously.
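The min/max purchasing trigger in the list above can be sketched as follows. This is a minimal illustration under stated assumptions, not how ASSISTics or Adage computes it: it assumes average daily usage and vendor lead time are known, treats on-hand plus on-order quantity as the inventory position, triggers a purchase when that position falls to the larger of the minimum level or the expected usage during the lead time, and then orders up to the maximum. All names and numbers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Item:
    part_number: str
    on_hand: int
    on_order: int          # open purchase orders not yet received
    min_level: int
    max_level: int
    daily_usage: float     # assumed average units relieved per day
    lead_time_days: int    # vendor delivery time


def suggested_purchase(item: Item) -> int:
    """Quantity to order up to max_level, or 0 if no purchase is triggered."""
    position = item.on_hand + item.on_order
    lead_time_demand = math.ceil(item.daily_usage * item.lead_time_days)
    trigger = max(item.min_level, lead_time_demand)
    if position > trigger:
        return 0
    return max(item.max_level - position, 0)


def relieve(item: Item, qty: int) -> int:
    """Relieve consumed or shipped units, then check the reorder trigger."""
    item.on_hand -= qty
    return suggested_purchase(item)


material = Item("RM-200", on_hand=120, on_order=0, min_level=50,
                max_level=200, daily_usage=10.0, lead_time_days=7)
print(relieve(material, 40))  # 0   (position 80 is above the trigger of 70)
print(relieve(material, 20))  # 140 (position 60; order up to 200)
```

Counting open orders in the inventory position keeps the system from reordering the same material each time more stock is relieved while a purchase is still in transit.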

In addition, consulting companies can assist with many of the purchasing, inventory-control, and logistics issues associated with any operation. There are also Third-Party Logistics (TPL) companies that will review your freight bills for overbilling.

Distributors

Inventory control at the distributor level can range from a manual system to something more sophisticated. A distributor purchases in full carton quantities but breaks the cartons down and sells product by the piece. For the distributor to use a bar code system, each piece would need to be bar coded, so a manual inventory-management system is most common for distributors. Deciding what to keep in inventory depends on how well the manager knows the territory (e.g., markets being serviced, local building codes, customer preferences, etc.), and each territory will be slightly different. Success is not measured by never running out of the highest-selling items, but by never running out of the slower movers that are occasionally needed to finish a job. Daily or weekly cycle counts keep inventory accurate. Historical data is useful, but changing market conditions and even the weather can affect what is needed on hand at any given time. The key to a distributor maintaining accurate inventory is discipline from everyone involved. Even the greatest and most expensive system will fail without a high level of discipline; it takes a team effort.
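Whether counts are recorded on paper or in software, checking them against the records is simple arithmetic. The Python sketch below compares counted quantities with recorded quantities for the items counted, reports the percentage within an allowed tolerance, and lists the exceptions to investigate. The function name, the tolerance rule (a fraction of the recorded quantity), and the part numbers are assumptions for illustration.

```python
from __future__ import annotations


def cycle_count_check(
    recorded: dict[str, int],
    counted: dict[str, int],
    tolerance: float = 0.0,
) -> tuple[float, dict[str, int]]:
    """Compare physical counts with recorded quantities.

    Returns (percent of counted items within tolerance, exceptions), where
    exceptions maps part number -> counted minus recorded. `tolerance` is an
    allowed variance expressed as a fraction of the recorded quantity.
    """
    exceptions: dict[str, int] = {}
    for part, qty in counted.items():
        expected = recorded.get(part, 0)
        variance = qty - expected
        if abs(variance) > tolerance * expected:
            exceptions[part] = variance
    if not counted:
        return 100.0, exceptions
    accurate = len(counted) - len(exceptions)
    return 100.0 * accurate / len(counted), exceptions


recorded = {"P-1": 100, "P-2": 40, "P-3": 12}
counted = {"P-1": 100, "P-2": 37, "P-3": 12}
print(cycle_count_check(recorded, counted))       # (66.66666666666667, {'P-2': -3})
print(cycle_count_check(recorded, counted, 0.1))  # (100.0, {})
```

Only the items actually counted are scored, which suits the daily or weekly partial counts described above rather than a full physical inventory.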

Jobsite Inventory Control

Inventory control at a job site creates issues not found in a fixed-location operation. First, there is seldom any space to store materials, even on large jobs. As a result, there may be two to three deliveries a day, mostly shipped directly from the distributor (more than 80% are direct shipments), to maintain a couple of days’ worth of materials on the job site. The contractor will usually hold limited inventory in its own warehouse for emergencies or fabrication. Incoming materials are checked against the pick tickets for accuracy, and the pick tickets are later checked against the material quoted for the job. Storing inventory in a clean, dry area can be difficult; trailers are often used for short-term storage. Weather can also speed up or delay installation, forcing scheduling changes. The distributor plays a key role in the contractor’s Just-in-Time (JIT) inventory-management system and must be able to adjust to the demands of the job.
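The two job-site checks, deliveries against pick tickets and pick tickets against the quote, are the same comparison applied at two levels. Here is a minimal Python sketch, assuming each document can be reduced to a mapping of part number to quantity; the function names and part numbers are hypothetical.

```python
from __future__ import annotations

from collections import Counter


def discrepancies(expected: dict[str, int], actual: dict[str, int]) -> dict[str, int]:
    """Part number -> (actual - expected) for every part that does not match.

    Negative values are shortages; positive values are overages.
    """
    parts = sorted(set(expected) | set(actual))
    return {
        p: actual.get(p, 0) - expected.get(p, 0)
        for p in parts
        if actual.get(p, 0) != expected.get(p, 0)
    }


def check_delivery(pick_ticket: dict[str, int], received: dict[str, int]) -> dict[str, int]:
    """Check materials received at the job site against the pick ticket."""
    return discrepancies(pick_ticket, received)


def check_job(quoted: dict[str, int], pick_tickets: list[dict[str, int]]) -> dict[str, int]:
    """Check the total of all pick tickets against the material quoted for the job."""
    shipped: Counter[str] = Counter()
    for ticket in pick_tickets:
        shipped.update(ticket)  # Counter.update adds quantities
    return discrepancies(quoted, dict(shipped))


tickets = [{"INS-2": 200, "JKT-1": 10}, {"INS-2": 250, "JKT-1": 10}]
print(check_delivery(tickets[0], {"INS-2": 190, "JKT-1": 10}))  # {'INS-2': -10}
print(check_job({"INS-2": 500, "JKT-1": 20}, tickets))          # {'INS-2': -50}
```

A shortage on a delivery points to a problem with that shipment, while a shortfall against the quote may simply mean material has not been shipped yet, so the two results are read differently even though the arithmetic is the same.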

Conclusion

The new inventory-control programs offer faster, more accurate information about materials on hand and shipments to the customer, allowing shorter delivery times and higher fill rates. The systems available are constantly being upgraded to be easier to use and to integrate with more processes in the supply chain. These programs would benefit any manufacturing company, regardless of size, by making a broad range of inventory information quickly available. For distributors and contractors, these types of inventory-management systems pose greater challenges and may not fit their current business processes. No matter what system you use, do not forget the basic nuts and bolts of a good inventory-control system. It still comes down to the right people making the right decisions to make the system work.

Copyright Statement

This article was published in the September 2016 issue of Insulation Outlook magazine. Copyright © 2016 National Insulation Association. All rights reserved. The contents of this website and Insulation Outlook magazine may not be reproduced by any means, in whole or in part, without the prior written permission of the publisher and NIA. Any unauthorized duplication is strictly prohibited and would violate NIA’s copyright and may violate other copyright agreements that NIA has with authors and partners. Contact publisher@insulation.org to reprint or reproduce this content.